No Ifs, Ands or Bots. Bot Use Disclosure Now Mandatory In California.

As anyone who shops online knows, many sellers and service providers offer messaging options that allow visitors to ask questions and interact with the site. Often, the “person” they’re communicating with isn’t real, even when it seems human (“Hi! I’m Tina! Can I help you?”).

It’s a chatbot, a type of bot that simulates conversations online. Other types of bots on the Internet crawl the web for search engines or identify infringing content.

So, why should influencers care? Because they offer and sell things online. They may also use bots to push out content on social media and interact with followers.

As of July 1, a new California law (starting at Cal. Bus. & Prof. Code § 17940) requires clear and conspicuous disclosures when bots are used to communicate or interact online with people in California. It’s now unlawful for any person to use a bot to communicate or interact online with someone in California with the intent to mislead that person about the bot’s artificial identity, for the purpose of knowingly deceiving them about the content of the communication in order to incentivize a commercial transaction (such as getting them to purchase or sell goods or services).

In a legal context, acting “knowingly” generally doesn’t require knowing about the law, and not knowing about or ignoring the law is usually no excuse. Failing to disclose a bot could itself be seen as showing an intent to deceive under the new law.

Unfortunately, this new law doesn’t say how bot disclosures should be made. However, the Federal Trade Commission (“FTC”), which enforces the FTC Act (the same law that applies to influencers disclosing endorsements of brands, products, and services), gives some general guidance about disclosures.

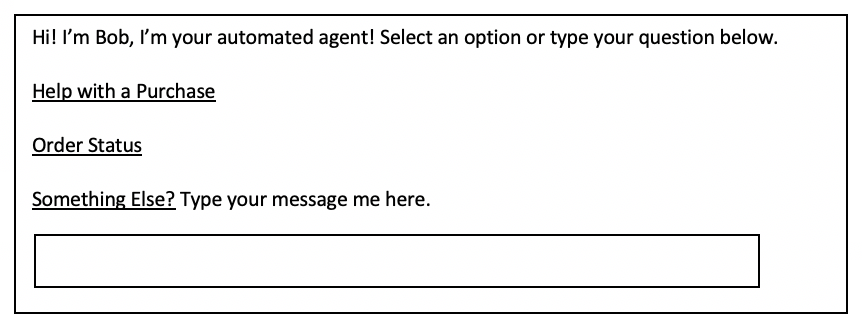

Based on that guidance, I think a best practice, and one that should comply with this new law, is to clearly and conspicuously state that a bot you’re using is a bot. Here’s an example:

A disclaimer like “Hi! I’m Bob! I’m not a person” may not comply.

Penalties for violating this law could include up to six months in prison and/or fines up to $2,500 per violation (!).

So, avoid problems with this new law. Say if it’s a bot or not. Just Sayin . . .TM. (By the way, I’m not a bot).